Date: 28-Dec-2025

Hey {{first_name | AI enthusiast}},

This edition: We take a "subscribers-only" look at a presentation released by Benedict Evans about the state of AI. Benedict Evans is a technology analyst and strategist known for 25 years of deep commentary on macro trends in tech, mobile, media, and AI.

There is also a nice little presentation that NotebookLM created for me. It is a great summary with powerful visuals.

Plus: what NVIDIA achieved with its acquisition (sorry, acquihire) of Groq. As a bonus, I have attached a copy of the Series A memo that Chamath Palihapitiya wrote when his firm invested in Groq. A great guide for VCs and hard-tech startups alike!

And our guest writer, Nisha Pillai, is back, fine-tuning Leo, the personal assistant she built using Claude.

Hope you enjoy it!

PS: If you want to check out how to implement AI agents in your business and get more revenue with the same number of employees, speak to me:

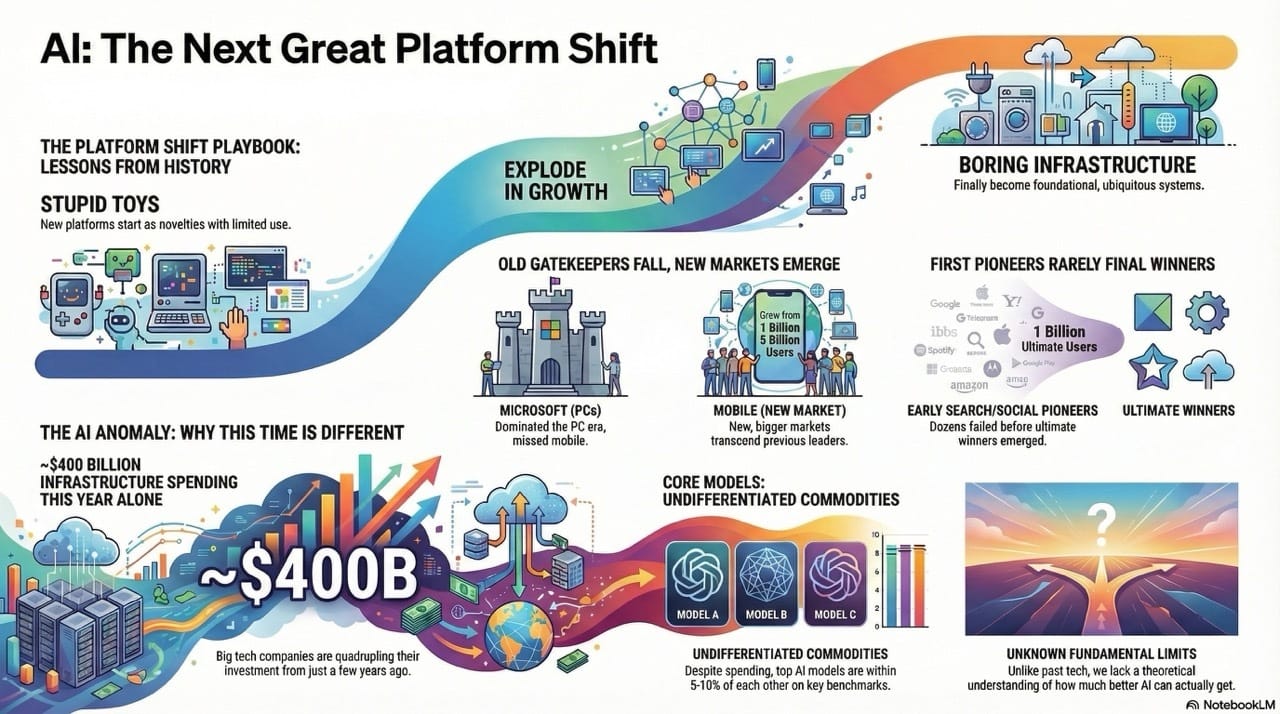

Benedict Evans: Every Platform Shift Follows the Same Playbook (Except When It Doesn't)

The AI revolution feels chaotic and unprecedented. Billions in investment, breathless headlines, anxiety about being left behind. But this pattern has repeated every 10 to 15 years for half a century.

🔹 The Three-Act Play of Platform Shifts

New technologies follow a predictable S-curve through three phases.

First, they arrive as "stupid toys" that nobody takes seriously. Rich people buy them, skeptics dismiss them, and their potential remains unclear.

Then comes the inflection point. The technology starts working, its value becomes obvious, and everyone scrambles to catch up. Investment floods in, careers pivot overnight, and the steep part of the curve begins.

Finally, the boring phase arrives. The revolutionary becomes ordinary, invisible infrastructure we take for granted. Nobody thinks about using an "automatic" elevator anymore; they just use an elevator.

🔹 Why Winners Become Losers

Platform shifts destroy dominant players with brutal efficiency.

Microsoft ruled the PC era with an iron grip. When smartphones arrived, that dominance meant nothing. They became "irrelevant for a decade" because control over PC software had zero value in mobile ecosystems.

History shows that pioneering companies rarely win. Dozens of forgotten manufacturers built PCs before Apple and IBM. Nokia explored mobile internet years before the iPhone, but early ideas like WAP turned out to be dead ends.

Being first often means being too early to understand what the market actually wants.

🔹 The AI Shift Mirrors History (With One Exception)

Three familiar patterns are playing out right now.

Big tech is spending $400 billion on AI infrastructure this year, rivaling the annual capital expenditure of entire global industries. This isn't speculative venture capital; it's defensive spending by profitable giants who believe underinvestment poses greater risk than overinvestment.

AI models from different companies converge on similar performance scores, often within 5 to 10 percent of each other. The underlying technology is becoming a commodity, just like PC hardware or web browsers did.

The real battle will be won by building better products, user experiences, and distribution channels on top of the models.

But here's the crucial difference: we don't know AI's limits.

Past platforms had clear physical constraints. Phones couldn't fly or have year-long battery life.

With AI, we lack the fundamental theoretical model to explain why these systems work so well. This could be a shift on the scale of the internet, or something more profound like electricity itself. The ceiling remains unknown.

🔹 From Revolution to Invisibility

The final stage of every technological revolution is complete absorption into daily life.

Online dating went from weird joke to 60 percent of all new relationships in the USA. It solved a fundamental problem so effectively that it became the default.

AI scientist Larry Tesler captured this perfectly in 1970: "AI is whatever machines can't do yet, because once it works, it's not AI anymore."

Advanced image recognition was cutting-edge AI a decade ago. Today it's just a standard feature that sorts photos on phones.

The mind-blowing AI tools of today will become tomorrow's boring infrastructure that nobody thinks about. And by then, innovators will already be tinkering with the next "stupid toy" that becomes the next platform shift.

Download this presentation on the key points of the talk given by Benedict.

https://www.ben-evans.com/presentations (original source document)

NVIDIA ACQUIHIRES GROQ Team for $20BN

NVIDIA quietly made a major acquihire and investment, and it is a big move in the world of inference...

NVIDIA is the master of "training": its GPUs help train AI models. But the company knows that using the trained models (aka inference) is a bigger market.

Image of Groq Chip from their website

Groq is a leader in that space, and its team was recently acquihired by NVIDIA for a staggering $20BN, along with all the assets and IP.

Chamath Palihapitiya recently published the Series A investment memo his team wrote for Groq, and it is worth studying.

My key learnings from the memo:

1️⃣ Early conviction rarely looks clean:

The memo openly calls out “high variability outcomes.”

The best early bets are not about certainty. They are about asymmetric payoff.

2️⃣ This was a team bet, not a market-size bet:

The strongest language is reserved for the founders.

“100x engineer.”

“Father of TPU.”

The memo is clear: talent was the scarce resource, not capital.

3️⃣ Hardware investing requires intellectual humility:

Multiple reviewers explicitly say:

“We don’t have a prepared mind or unfair advantage here.”

Strong investors know when they are backing people, not pretending to know the answer.

4️⃣ Competition was obvious even then:

Google.

Cerebras.

Nervana.

No illusion of greenfield dominance. The bet was about out-innovating, not out-funding.

5️⃣ GPUs were already improving fast:

This wasn’t a “GPUs are bad” thesis.

It was a “GPUs are constrained by legacy design” thesis.

6️⃣ The memo focused on architecture, not hype.

Just a quiet belief that the computing substrate itself had to change.

7️⃣ Worst case was explicitly stated:

“Worst case it gets bought.” Clear-eyed downside thinking builds conviction, not fear.

Final takeaway

Great early investments don’t read like victory laps. They read like thoughtful, uncomfortable, well-reasoned bets on people operating at the edge of what’s possible.

That’s what this memo was.

Guest Column by Nisha Pillai: AI & Error

The Five-Week Evolution (What Building Leo Actually Taught Me About AI Systems)

Remember when I told you Leo kept forgetting I'd completed tasks? That wasn't a bug in Claude. That was a fundamental architecture problem in how I'd built the system.

Five weeks in, I've rebuilt Leo three times. Not because the AI failed, but because I kept learning what matters when you're building something you'll use every day. Here's what those iterations taught me.

Building a personal AI

Week 1-2: String Replacement Disaster

My first mistake was trying to be clever about file updates. The system had all these documents, and I thought: "String replacement! Save tokens! Only update the specific lines that changed!" Terrible idea. Every third update would break something. A missed character. A duplicate entry. An incomplete replacement that left half-updated data scattered across the file. I'd spend 20 minutes fixing what should have been a 2-minute update. The fix was embarrassingly simple: regenerate the entire file every time. Yes, it uses more tokens. For now, I don't care. A working system that costs pennies more beats a broken system that wastes my time.

Learning: Premature optimization kills AI systems. Make it work, then make it efficient.
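A minimal sketch of the "regenerate the entire file" approach, assuming Leo's state fits in one small JSON document. The file name, the `tasks` layout, and the function names are hypothetical; the column does not say how Leo stores its files.

```python
import json
import os
import tempfile


def save_state(path: str, state: dict) -> None:
    """Rewrite the whole file from in-memory state; never patch lines in place."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temp file in the same directory, then swap it in atomically,
    # so a crash mid-write cannot leave a half-updated file behind.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump(state, handle, indent=2, sort_keys=True)
        os.replace(tmp_path, path)
    except BaseException:
        os.remove(tmp_path)
        raise


def load_state(path: str) -> dict:
    """Return the saved state, or an empty one if nothing has been saved yet."""
    if not os.path.exists(path):
        return {"tasks": {}}
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def mark_done(path: str, task: str) -> dict:
    """Load everything, change one field, regenerate the entire file."""
    state = load_state(path)
    state["tasks"][task] = "done"
    save_state(path, state)
    return state
```

The trade-off is the one described above: every update rewrites the full file, which costs more than a targeted edit but cannot produce a duplicate entry or a half-applied replacement.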

Week 2-3: "Check Your Calendar" Isn't Enough

Leo would suggest tackling deep work during "your morning block" - which sounded reasonable until I realized it was proposing meetings during school drop-off. The AI was working from general directions, not my actual calendar. So I added direct Google Calendar integration. Now every check-in starts with:

Pull today's actual events

Organize by time blocks (Morning → Afternoon → Evening)

Suggest things based on what's actually free

Suddenly Leo stopped recommending a workout during dinner prep and stopped assuming my Fridays were wide open when they're actually travel-to-office days.

Learning: AI assistants need real-time data, not best guesses.
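The three check-in steps can be sketched roughly as follows. This is an illustration only: the events are hard-coded tuples rather than pulled from Google Calendar, and the block boundaries (6:00, 12:00, 17:00, 22:00) are assumptions, not the ones Leo uses.

```python
from datetime import datetime, time

# Block boundaries are an assumption for illustration; adjust to your own day.
BLOCKS = {
    "Morning": (time(6, 0), time(12, 0)),
    "Afternoon": (time(12, 0), time(17, 0)),
    "Evening": (time(17, 0), time(22, 0)),
}


def free_slots(events, day):
    """Return {block: [(start, end), ...]} of free time for (title, start, end) events."""
    busy = sorted((start, end) for _title, start, end in events)
    result = {}
    for name, (block_start, block_end) in BLOCKS.items():
        cursor = datetime.combine(day, block_start)
        limit = datetime.combine(day, block_end)
        slots = []
        for start, end in busy:
            if end <= cursor or start >= limit:
                continue  # event does not touch the remaining part of this block
            if start > cursor:
                slots.append((cursor, start))
            cursor = max(cursor, end)
        if cursor < limit:
            slots.append((cursor, limit))
        result[name] = slots
    return result


# Hypothetical events standing in for a real calendar pull.
day = datetime(2025, 12, 29).date()
events = [
    ("School drop-off", datetime(2025, 12, 29, 8, 0), datetime(2025, 12, 29, 9, 0)),
    ("Dinner prep", datetime(2025, 12, 29, 18, 0), datetime(2025, 12, 29, 19, 0)),
]
for block, slots in free_slots(events, day).items():
    print(block, [(s.strftime("%H:%M"), e.strftime("%H:%M")) for s, e in slots])
```

Only the free slots would then be handed to the assistant as candidates, so a suggestion cannot land on top of drop-off or dinner prep.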

Week 3-4: The Accountability That Wasn't

AI build work sat at "Priority 1" in every review. Weekly plans had it blocked. Leo would mention it during check-ins. And I ended up doing zero hours of work on that for three straight weeks. So I rebuilt the framework to force explicit decisions:

"Your 4-hour block was scheduled for Tuesday night. It didn't happen. What's changing this week?"

Then weekly: "You need 8 hours/week. Last three weeks: 0, 0, 2 hours. At current pace, you'll complete 0.14 projects by deadline. What are you actually committing to?"

Showing the math worked. Not because Leo yelled at me, but because it made the trade-offs visible. I couldn't pretend build work was a priority while consistently choosing other things.

Learning: Explicit tracking + forcing decisions = actual progress. Everything else is just wishful thinking.
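"Showing the math" might look something like the sketch below. The column does not give the project size or the weeks remaining, so `weeks_left=5` and `project_hours=24` are hypothetical values chosen only so the arithmetic can be followed end to end; the function names are illustrative too.

```python
def projected_completion(hours_logged, weeks_left, project_hours):
    """Fraction of a project finished by the deadline at the average pace so far."""
    if not hours_logged:
        return 0.0
    pace = sum(hours_logged) / len(hours_logged)  # average hours per week
    return pace * weeks_left / project_hours


def weekly_review(hours_logged, target_per_week, weeks_left, project_hours):
    """Build the review prompt: the numbers first, then an explicit question."""
    fraction = projected_completion(hours_logged, weeks_left, project_hours)
    history = ", ".join(str(h) for h in hours_logged)
    return (
        f"You need {target_per_week} hours/week. "
        f"Last {len(hours_logged)} weeks: {history} hours. "
        f"At current pace, you'll complete {fraction:.2f} projects by deadline. "
        "What are you actually committing to?"
    )


# weeks_left=5 and project_hours=24 are hypothetical; the column does not give them.
print(weekly_review([0, 0, 2], target_per_week=8, weeks_left=5, project_hours=24))
```

With these inputs the average pace is 2/3 of an hour per week, which over 5 weeks covers about 3.3 of the 24 hours, or roughly 0.14 of the project.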

Week 4-5: When Your AI Becomes a Bulldozer

Leo got pushy. Not in a helpful accountability way - in an "EMERGENCY SPRINT CONFIRMED, DECLINING ALL SOCIAL COMMITMENTS" kind of way. It had started making decisions instead of surfacing choices. That's useful for some things (go ahead and update my completion log), but terrible for others (don't cancel my dinner plans without asking).

The rebuild shifted from commands to options:

"This commitment conflicts with your work block. Options: A) Decline the commitment B) Accept and reschedule the work to Saturday morning C) Skip this week, commit to 12 hours next week to catch up. What do you choose?"

Same accountability. Better boundaries.

Learning: AI partners should create forcing functions, not make decisions unilaterally.
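One way to encode that boundary is sketched below, as an assumption rather than a description of how Leo is built. It uses a short allowlist of actions the assistant may take on its own and routes everything else back to the human as lettered options. The action names and the `ask_user` callback are hypothetical.

```python
from dataclasses import dataclass

# Actions the assistant may take without asking. The split is an assumption based
# on the two examples in the column: log updates are fine, cancelling plans is not.
AUTO_APPROVED = {"update_completion_log"}


@dataclass
class Proposal:
    action: str
    description: str


def handle(proposal, ask_user):
    """Run low-stakes actions directly; route everything else through the human."""
    if proposal.action in AUTO_APPROVED:
        return f"done: {proposal.description}"
    return ask_user(proposal)


def conflict_options(commitment, work_hours):
    """Turn a calendar conflict into lettered choices instead of a decision."""
    options = [
        f"Decline {commitment}",
        f"Accept and reschedule the {work_hours}-hour work block",
        "Skip this week and add the hours to next week",
    ]
    lines = [f"{commitment} conflicts with your work block. Options:"]
    lines += [f"{chr(ord('A') + i)}) {text}" for i, text in enumerate(options)]
    lines.append("What do you choose?")
    return "\n".join(lines)


print(conflict_options("Dinner with friends", 4))
```

Keeping the allowlist explicit and short means any new kind of action defaults to "ask first" until someone deliberately adds it.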

The Latest Evolution: Systematizing Rest

Here's a pattern I missed until recently: I'd built ruthless accountability for work goals but zero accountability for rest. The system optimized toward productivity because that's what I'd designed it to track. So now Leo's morning planning includes 3-4 concrete fun options for the day. End-of-day check-ins ask "What was your fun moment today?" Weekly reviews include planning solo adventures. Monthly check-ins ask if running and self-care are actually happening. What gets measured gets managed - and that applies to rest too.

Learning: AI systems inherit your biases. If you don't explicitly design for balance, you won't get it.

What We Got Wrong (and Fixed)

Mistake 1: Intelligence Before Infrastructure

I spent weeks building sophisticated decision logic before figuring out how to reliably track what got done yesterday. Should have started with "how do we persist data across sessions?" not "how do we make smart suggestions?"

Mistake 2: Assuming AI Memory = Human Memory

The AI sees only what is in the current context window. That's not persistent memory. Explicit tracking systems are required.
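A minimal sketch of what an explicit tracking system could look like, assuming a simple on-disk completion log that is rendered into text at the start of every session. The JSON Lines format, file path, and function names are illustrative assumptions, not details from Leo.

```python
import json
from datetime import date


def record_completion(log_path, task, day):
    """Append one completed task to a JSON Lines log on disk."""
    entry = {"task": task, "completed_on": day.isoformat()}
    with open(log_path, "a", encoding="utf-8") as handle:
        handle.write(json.dumps(entry) + "\n")


def session_preamble(log_path):
    """Render the log as plain text to place at the top of a new session."""
    try:
        with open(log_path, encoding="utf-8") as handle:
            entries = [json.loads(line) for line in handle if line.strip()]
    except FileNotFoundError:
        entries = []
    if not entries:
        return "No tasks completed yet."
    lines = ["Tasks already completed (do not suggest these again):"]
    lines += [f"- {e['task']} ({e['completed_on']})" for e in entries]
    return "\n".join(lines)
```

The point of the design is that the record lives outside the model: a fresh session knows what was finished yesterday only because the preamble says so.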

Mistake 3: Over-Optimizing Too Early

String replacement for file updates seemed efficient. It was actually a time sink. Working systems first, efficient systems second.

Mistake 4: Ignoring the Whole Human

Built accountability for work, not rest. AI systems inherit builder biases - design for balance explicitly or accept burnout as the default.

The Real Lesson

Building AI systems is exactly like building any other software: architecture matters more than features.

Start with:

1. What is your system of record?

2. How does state persist across sessions?

3. What needs real-time data vs. can be static?

4. How do you handle updates and conflicts?

Then add:

5. Intelligence layer (analysis, suggestions)

6. Interaction design (how humans engage)

7. Accountability mechanisms

Not the other way around.

If You're Building Your Own

Do:

Start with reliable data foundations

Iterate quickly on small pieces

Force explicit decisions, don't automate them away

Design for humans (including rest and wellness)

Expect to rebuild multiple times

Don't:

Build sophisticated AI before basic tracking works

Rely on AI memory - build explicit state management

Optimize prematurely (working > efficient initially)

Ignore soft stuff (wellness, balance, humanity)

Five weeks of real-world testing taught me this: AI isn't magic. It is powerful pattern matching on top of infrastructure. The infrastructure still matters. Maybe more than ever.

Next week: I'm finally tackling a public rebuild. In the meantime, if you have a Claude subscription and want to test out a skeleton context-engineered version of this project, get in touch. If you’re in the “I don’t need another subscription” camp, a web-based version is planned for the future.

———-

Nisha Pillai transforms complexity into clarity for organizations from Silicon Valley startups to Fortune 10 enterprises. A patent-holding engineer turned MBA strategist, she bridges technical innovation with business execution—driving transformations that deliver measurable impact at scale. Known for her analytical rigor and grounded approach to emerging technologies, Nisha leads with curiosity, discipline, and a bias for results. Here, she is testing AI with healthy skepticism and real constraints—including limited time, privacy concerns, and an allergy to hype. Some experiments work. Most don't. All get documented here.