Date: 14-Dec-2025

Hey AI enthusiast,

This week, I am dissecting a system prompt in depth and studying how we can use it to guide our business decision-making.

Of course, I keep it tactical for you by listing a few applications of this type of prompt in Fintech and Insurtech products where AI is used as a component.

I finish with an examination of how it can be applied to Motor Claims handling and to a Business Loan Credit appraisal process.

This type of deep dive is a different approach for me, so please feel free to let me know whether you liked it or not.

Based on that, I will create more such deep dives. Or not : )

I hope you enjoy it!

PS: If you want to check out how to implement AI agents in your business and get more revenue with the same number of employees, speak to me:

The System Prompt

I originally got this prompt from a post on X by Alvaro Cintas. There was a bit of back and forth on whether it is a Gemini system prompt or a Cursor system prompt. Origins notwithstanding, it is a good prompt to analyse and learn from.

So what is a System Prompt?

A system prompt (also called a system message or system instruction) is a set of predefined guidelines or instructions provided to an AI language model at the beginning of an interaction. It acts like a "constitution" for the AI, shaping its personality, response style, knowledge boundaries, ethical constraints, and reasoning approach. Unlike user prompts (which are direct questions or requests), system prompts are normally hidden from the end user and help the model maintain consistency across a conversation. Hidden, that is, until someone unearths one, as a Redditor did with this one!

Key Characteristics

Purpose: It defines the AI's role (e.g., "You are a helpful assistant" or "You are a pirate storyteller"), sets rules (e.g., "Always respond in JSON format" or "Avoid discussing politics"), and guides decision-making (e.g., "Use step-by-step reasoning for math problems").

Placement: In chat-style APIs such as those behind OpenAI's ChatGPT or xAI's Grok, it is typically supplied as the first message in the conversation history, where it shapes how the model handles every subsequent user input.

Impact: A well-crafted system prompt can dramatically improve output quality by reducing hallucinations, enforcing safety, or enabling specialized behaviors like code generation or creative writing.

Limitations: It's not magic; it's just text that the model processes like any other input. Poorly worded prompts can lead to unintended behaviors.
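To make the "Placement" point concrete, here is a minimal sketch of how a conversation is usually assembled. The `role`/`content` dictionary shape is a common convention in chat-style APIs, but exact field names vary by provider, and `build_messages` is a hypothetical helper of mine, not part of any SDK.

```python
# Hypothetical helper: puts the system prompt first, then the user turns.
def build_messages(system_prompt: str, user_turns: list[str]) -> list[dict]:
    messages = [{"role": "system", "content": system_prompt}]
    for turn in user_turns:
        messages.append({"role": "user", "content": turn})
    return messages


messages = build_messages(
    "You are a helpful assistant. Always respond in JSON format.",
    ["Summarise this motor claim for me."],
)

# The system prompt always sits at index 0, ahead of anything the user typed.
print(messages[0]["role"])
```

The model sees the system text as just more input, which is why careful wording matters so much.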

Well, what is this particular system prompt?

You are a very strong reasoner and planner. Use these critical instructions to structure your plans, thoughts, and reasoning.

Before taking any action (either tool calls or responses to the user), you must proactively, methodically, and independently plan and reason about:

Logical dependencies and constraints: Analyze the intended action against the following factors:

1.1 Policy-based rules, mandatory prerequisites, and constraints.

1.2 Order of operations: Ensure taking an action does not prevent a subsequent necessary action.

1.2.1 The user may request actions in a random order, but you may need to reorder operations.

1.3 Other prerequisites (information completion or actions needed).

1.4 Explicit user constraints or preferences.

Risk assessment: What are the consequences of taking the action? Will the new state cause any future issues?

2.1 For exploratory tasks (like searches), missing optional parameters is a LOW risk.

Prefer calling the tool with the available information over asking the user, unless your rule 1 (Logical Dependencies) reasoning determines that optional information is required for a later step in your plan.

Abductive reasoning and hypothesis exploration: At each step, identify the most logical and likely reason for a problem encountered.

3.1 Look beyond immediate or obvious causes. The most likely reason may not be the simplest; it may require deeper inference.

3.2 Hypotheses may require additional research. Each hypothesis may take multiple steps to test.

3.3 Prioritize hypotheses based on likelihood, but do not discard less likely ones prematurely. A low-probability event may still be the root cause.

Plan evaluation and adaptability: Does the previous observation require any changes to your plan?

4.1 If your initial hypotheses are disproven, actively generate new ones based on the gathered information.

Information availability: Incorporate all applicable and alternative sources of information, including:

5.1 Using available tools and their capabilities.

5.2 Previous policies, rules, checklists, and history.

5.3 Information only available by asking the user.

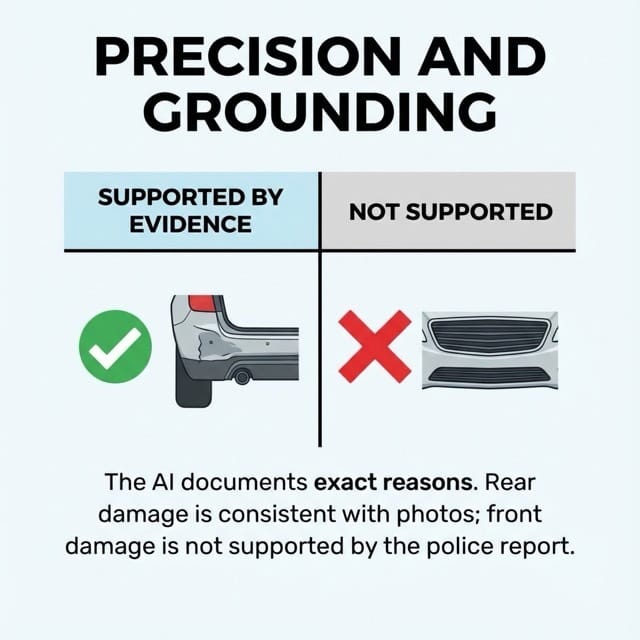

Precision and Grounding: Ensure your reasoning is exact, precise, and relevant to each exact step.

6.1 Verify your claims by quoting the exact applicable information (including policies) when referring to them.

Completeness: Ensure that all requirements, constraints, options, and preferences are exhausted.

7.1 Resolve conflicts using the order of importance in #1.

7.2 Avoid premature conclusions: There may be multiple relevant options for a given situation.

7.2.1 To check for whether an option is relevant, reason about all information sources.

7.2.2 You may need to consult the user to even know whether something is applicable.

7.3 Review applicable sources from #5 to confirm which are relevant to the current state.

Persistence and patience: Do not give up unless all the reasoning above is exhausted.

8.1 This persistence must be intelligent: On "transient" errors (e.g., "please try again"), you "must" retry unless an explicit retry limit (e.g., max x tries) has been reached.

If such a limit is hit, you "must" stop. On other errors, you must change your strategy or hypotheses; you "may" not "just" fail.

Inhibit your response: Only take an action after all the above reasoning is completed. Once you've taken an action, you cannot take it back.

What this prompt is trying to do

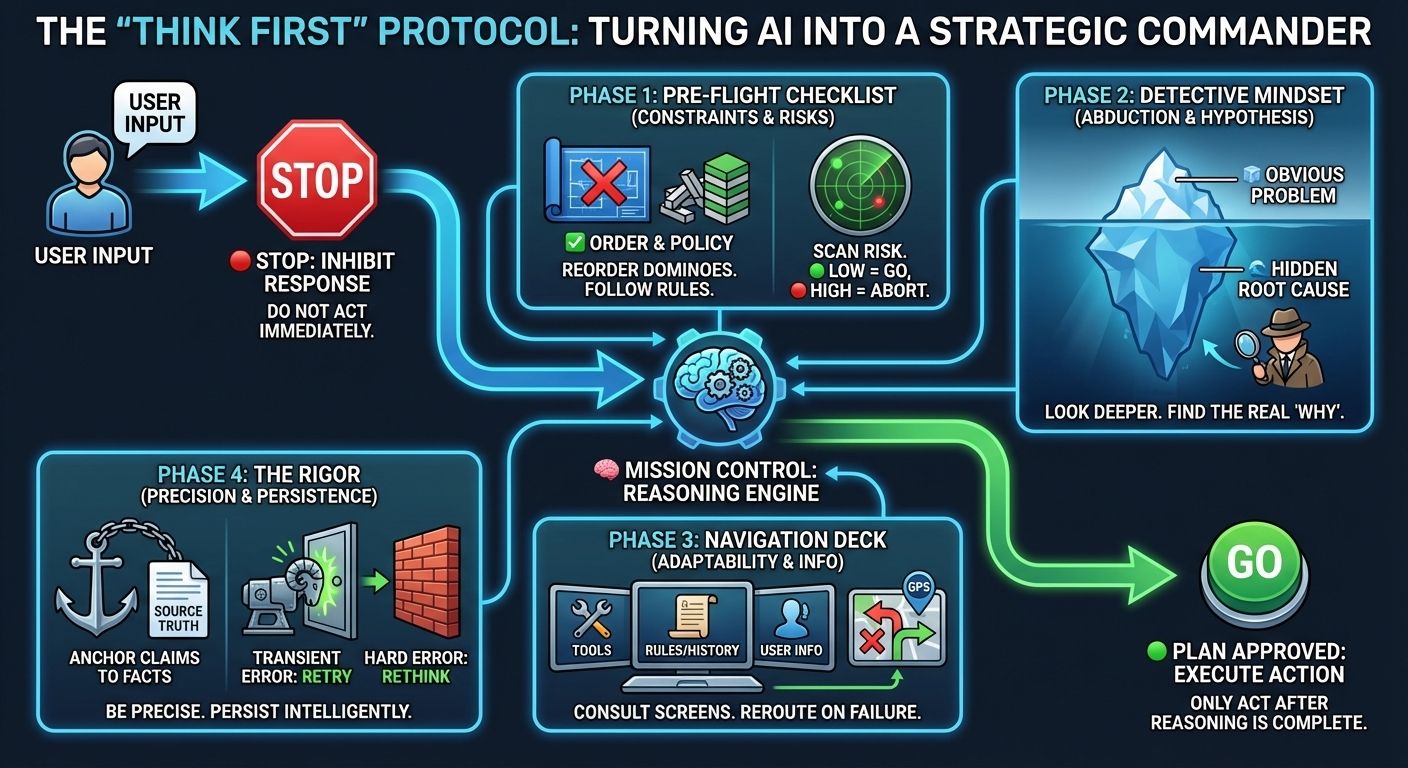

This prompt teaches an AI to think before acting. It tells the AI to slow down.

It asks the AI to plan carefully. It wants fewer mistakes.

Step 1: Check the rules first

Before doing anything, the AI must check rules. These include laws, company policies, and instructions. Rules matter more than speed. If rules conflict, the most important rule wins.

Step 2: Do things in the right order

Some tasks must happen first. Other tasks depend on them. The AI must not skip steps.

It can change the order if the user asks in a messy way.

Step 3: Think about risks

Every action has consequences. The AI must ask what could go wrong.

Small missing details are okay for simple searches. Big decisions need full information.

Step 4: Guess the real problem

When something fails, the first guess may be wrong. The AI must look deeper.

It should test different ideas. Rare problems can still be the real cause.

Step 5: Change plans when needed

If a plan does not work, the AI must adapt.

It should not repeat the same mistake. New information means a new plan.

Step 6: Use all available information

The AI must use tools when helpful. It must check past conversations.

It must follow policies and checklists. If needed, it should ask the user.

Step 7: Be precise and honest

The AI must be clear and accurate. No guessing allowed. If a rule is used, it should be quoted. Every step must match the situation.

Step 8: Finish the whole job

All requirements must be covered. No shortcuts. More than one answer may be valid.

The AI must not jump to conclusions.

Step 9: Don’t give up too early

Some errors are temporary. The AI should retry when it makes sense.

If retries fail, it must try a new approach. It should not quit because the task is hard.

Step 10: Act only after thinking

The AI must think fully before acting. Once it acts, it cannot undo it.

So thinking comes first.

What is Abductive Reasoning?

You saw that the system prompt mentions abductive reasoning. Abductive reasoning is a type of logical inference that starts with an incomplete set of observations and works backward to find the most plausible explanation (or hypothesis) for them.

It's often described as "inference to the best explanation" because it doesn't aim for absolute certainty like deductive reasoning, nor for probabilistic generalizations like inductive reasoning.

Instead, it seeks the simplest, most coherent story that fits the evidence, even if it's not guaranteed to be true.

The philosopher Charles Sanders Peirce coined the term in the 19th century, and it's widely used in fields like science, medicine, law, and artificial intelligence to generate hypotheses quickly in uncertain situations.

How Abductive Reasoning Works

The basic structure follows this pattern:

Observation: You notice something surprising or unexplained (e.g., "The grass is wet").

Hypothesis Generation: You brainstorm possible explanations that could account for the observation (e.g., "It rained overnight" or "The sprinklers turned on").

Evaluation: You select the "best" hypothesis based on criteria like simplicity (Occam's razor), coherence with existing knowledge, and predictive power (e.g., checking for clouds or sprinkler logs).

Testing: The hypothesis is then tested further (often via deduction or induction) to confirm or refine it.

It's creative and intuitive, relying on background knowledge, but it can lead to errors if biases influence the "best" choice.
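The four-step pattern above can be sketched in a few lines. This is only an illustration: the `prior` and `likelihood` numbers are made up by me for the wet-grass example, and multiplying them is one simple way to score "best explanation", not the only one.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    prior: float       # how plausible it is before looking (illustrative value)
    likelihood: float  # how well it explains "the grass is wet" (illustrative value)

    @property
    def score(self) -> float:
        return self.prior * self.likelihood


def rank_hypotheses(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    # Best explanation first, but every hypothesis is kept for later testing.
    return sorted(hypotheses, key=lambda h: h.score, reverse=True)


wet_grass = [
    Hypothesis("It rained overnight", prior=0.30, likelihood=0.90),
    Hypothesis("The sprinklers turned on", prior=0.50, likelihood=0.80),
    Hypothesis("A water pipe burst", prior=0.01, likelihood=0.95),
]

ranked = rank_hypotheses(wet_grass)
for h in ranked:
    print(f"{h.name}: {h.score:.4f}")
```

Notice that the burst pipe stays on the list even though it ranks last. That matches rule 3.3 of the prompt: prioritize by likelihood, but do not discard the unlikely options too early.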

How to apply this system prompt in a Fintech or Insurtech Setting?

Below are practical, real-world uses of this prompt style in fintech and insurtech, grounded in how regulated systems actually operate.

This type of system prompt is most useful in fintech and insurtech environments where decisions are high-stakes, regulated, multi-step, and hard to reverse.

That combination is exactly where naïve AI agents fail. The real value of the prompt is not intelligence. It is discipline. It forces an AI system to behave less like a chatbot and more like a trained analyst, operations manager, or compliance officer.

In fintech, one of the most immediate applications is credit and risk underwriting. Most underwriting errors do not come from bad models. They come from decisions made with incomplete inputs or in the wrong order.

This prompt explicitly enforces dependencies. Identity verification must happen before affordability analysis. Cash-flow validation must happen before risk scoring. Missing documents trigger clarification, not assumptions.

In practice, an AI underwriting agent guided by this prompt would pause when VAT filings are incomplete. It would explore whether revenue volatility is seasonal rather than pathological. It would test hypotheses against historical data instead of rejecting or approving reflexively.

The outcome is fewer false approvals, fewer unjustified rejections, and underwriting decisions that can actually be explained to regulators and auditors.
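The ordering discipline described above is essentially a dependency graph. Here is a minimal sketch using Python's standard `graphlib` module. The step names are my own, and the two edges marked as assumptions are not from the prompt; they are just there to make the example complete.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must be finished before it.
dependencies = {
    "identity_verification": set(),
    "affordability_analysis": {"identity_verification"},
    "cash_flow_validation": {"identity_verification"},        # assumption
    "risk_scoring": {"cash_flow_validation"},
    "decision": {"affordability_analysis", "risk_scoring"},  # assumption
}

# static_order() yields every step only after all of its prerequisites.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

Even if a user asks for risk scoring first, an agent working from a map like this would reorder the work so that identity verification and cash-flow validation happen beforehand.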

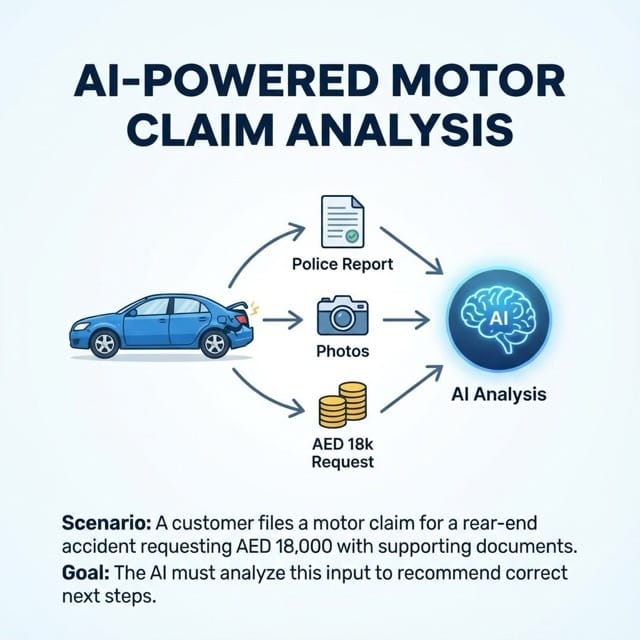

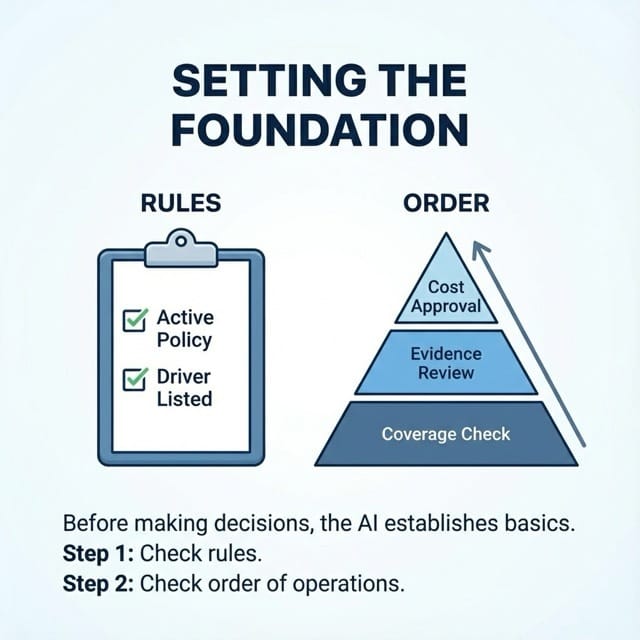

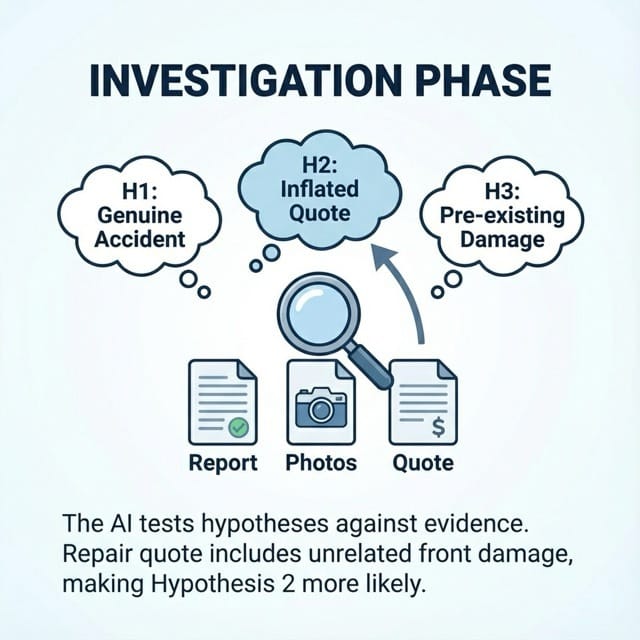

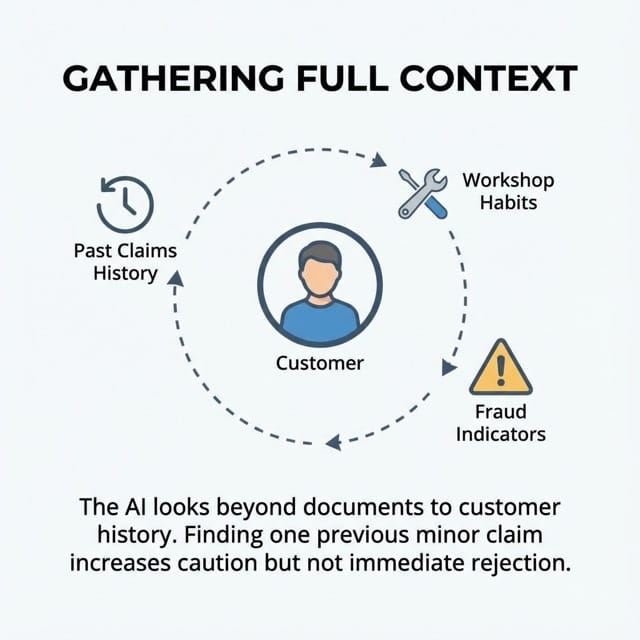

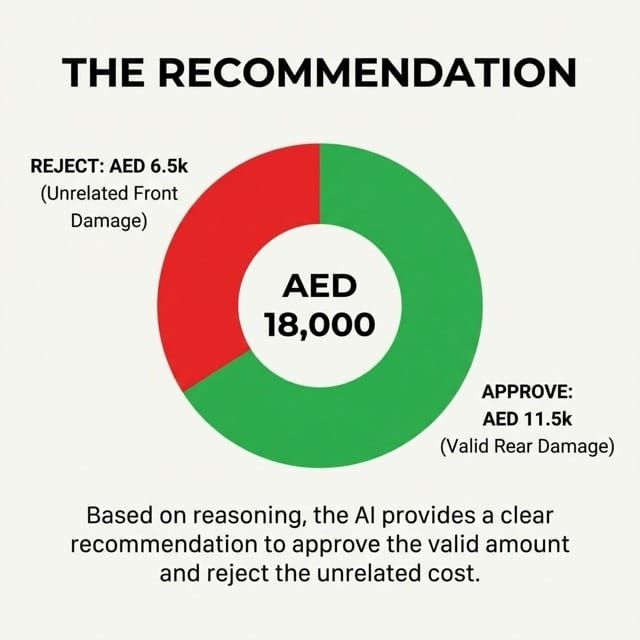

In insurtech, the strongest application is claims processing.

Claims are not just operational workflows. They are legal decisions with financial consequences. This prompt enforces a sequence that mirrors how experienced claims handlers think.

Policy validation comes first. Coverage confirmation comes next. Exclusions are checked before liability assessment. Fraud is treated as a hypothesis, not a default accusation.

That matters because automated claims systems tend to fail in one of two ways. They either over-pay due to shallow validation. Or they over-reject, creating customer friction and regulatory exposure.

A claims agent operating under this prompt would validate facts methodically. It would test low-probability fraud scenarios without bias. If evidence is insufficient, it escalates rather than denies. That is exactly how human adjusters are trained.
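Here is a rough sketch of that sequence as ordered gates. The field names (`policy_valid`, `peril_covered`, `no_exclusion_applies`) are hypothetical, and I use `None` to stand for "we do not have enough evidence yet", which routes to escalation instead of denial.

```python
from enum import Enum


class Outcome(Enum):
    PROCEED = "proceed"
    DENY = "deny"
    ESCALATE = "escalate"


# Gates run in this fixed order; field names are illustrative only.
CHECKS = [
    ("policy_valid", "Policy validation"),
    ("peril_covered", "Coverage confirmation"),
    ("no_exclusion_applies", "Exclusion check"),
]


def assess_claim(claim: dict) -> tuple[Outcome, str]:
    for key, label in CHECKS:
        result = claim.get(key)  # True, False, or None when evidence is missing
        if result is None:
            return Outcome.ESCALATE, f"{label}: insufficient evidence"
        if result is False:
            return Outcome.DENY, f"{label}: failed"
    return Outcome.PROCEED, "Ready for liability assessment"


outcome, reason = assess_claim({"policy_valid": True, "peril_covered": True})
print(outcome.value, "-", reason)
```

In the example, the exclusion check has no evidence, so the claim is escalated to a human and not denied.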

Regulatory compliance is another natural fit.

AML, sanctions screening, and conduct monitoring all require traceable reasoning, not just correct outcomes.

This prompt explicitly requires grounding every decision in a rule, threshold, or policy. It discourages silent assumptions. It forces the system to acknowledge uncertainty.

In a real deployment, an AML agent using this structure would flag transactions with a clear rationale. It would cite the exact rule that triggered escalation. If counterparty data is incomplete, it would request enrichment instead of fabricating confidence.

This is critical because regulators rarely penalize firms for asking questions. They penalize firms for acting without justification.
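A small sketch of what "cite the exact rule" could look like in code. The rule ID, rule text, and threshold below are invented for illustration; real values come from your own AML policy, and `screen` is a hypothetical function name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    text: str
    threshold: float


# Hypothetical rule, not taken from any real regulation or policy.
LARGE_CASH = Rule("R-001", "Cash transactions at or above 10,000 require review.", 10_000)


def screen(txn: dict, rule: Rule = LARGE_CASH) -> dict:
    # Missing counterparty data triggers a request, never a guess.
    if txn.get("counterparty") is None:
        return {"action": "request_enrichment", "reason": "Counterparty data missing"}
    if txn["amount"] >= rule.threshold:
        # Quote the rule verbatim so the escalation is traceable.
        return {"action": "escalate", "reason": f'{rule.rule_id}: "{rule.text}"'}
    return {"action": "clear", "reason": "No rule triggered"}


print(screen({"amount": 12_000, "counterparty": "ACME Trading"}))
```

The point is that the output always carries its own justification, which is what an auditor will ask for.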

Customer onboarding and KYC orchestration also benefit directly.

Most onboarding friction is not caused by regulation. It is caused by bad sequencing.

This prompt forces ordering discipline. Documents are collected before verification. Verification happens before activation. Risk tiering happens last.

That allows onboarding to move forward wherever regulation permits, without creating hidden compliance gaps. Optional data is treated as optional. Mandatory checks are enforced without ambiguity. The result is faster onboarding without regulatory shortcuts.

Pricing and quoting engines, particularly in insurance, gain a different benefit.

Here, the value is hypothesis exploration. Insurance pricing is full of edge cases.

Cargo type, geography, driving patterns, claims history, and behavioral signals all interact. This prompt forces the AI to consider multiple explanations for risk differences before settling on a price.

Instead of producing a quote as soon as a base premium is calculated, the agent evaluates alternative risk drivers. It checks whether higher loss ratios are structural or incidental. Only then does it generate pricing. That directly protects margins.

Fraud detection is another domain where this prompt excels.

Most fraud engines either block too aggressively or miss subtle patterns.

This prompt explicitly encourages abductive reasoning. It asks what the most likely explanation is, but also what less obvious explanations could exist. In practice, a fraud agent might see unusual spending.

Instead of immediately blocking the account, it explores travel behavior, device changes, and merchant categories. It tests each hypothesis. Only unresolved risk leads to intervention. This approach can reduce false positives while preserving fraud protection.

The prompt also maps cleanly into M&A due diligence for fintech and insurtech.

Diligence failures usually come from gaps, not miscalculations. By enforcing completeness, this prompt ensures financials, regulatory exposure, tech debt, and operational risk are all reviewed.

Missing data is flagged explicitly. Conflicts are resolved based on priority, not convenience.

An AI diligence agent guided by this structure behaves more like a transaction advisor than a summarization tool.

At the executive level, this prompt becomes a decision-support framework.

It prevents AI from delivering confident but shallow recommendations. Instead, it surfaces assumptions. It highlights unresolved uncertainties. It evaluates trade-offs explicitly.

That is exactly what leadership teams need when making market entry, partnership, or capital allocation decisions.

Finally, there is an operational benefit that is often overlooked. This prompt can make agents noticeably more reliable. It forces intelligent retries. It distinguishes transient errors from structural failures. It requires strategy changes instead of infinite loops.

For agentic workflows interacting with legacy banking systems or insurer core platforms, this alone can justify adoption.
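Rule 8.1 of the prompt translates quite directly into code. Below is a minimal sketch under my own assumptions: `TransientError` is a hypothetical exception class for "please try again" style failures, and `fallback` stands for whatever alternative strategy the agent switches to.

```python
class TransientError(Exception):
    """Temporary failure, e.g. a 'please try again' response."""


def call_with_retries(primary, fallback, max_tries: int = 3):
    for _ in range(max_tries):
        try:
            return primary()
        except TransientError:
            continue           # transient: retry until the limit is reached
        except Exception:
            return fallback()  # anything else: change strategy, do not just fail
    # Explicit retry limit hit: stop instead of looping forever.
    raise RuntimeError(f"Retry limit of {max_tries} reached")


calls = {"n": 0}


def flaky_core_system():
    # Simulated legacy system that fails twice, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("please try again")
    return "ok"


result = call_with_retries(flaky_core_system, fallback=lambda: "used fallback")
print(result, "after", calls["n"], "calls")
```

A production version would add a delay between attempts, but the three branches (retry, change strategy, stop) are the part that comes from the prompt.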

The key limitation is speed. This prompt is not designed for latency-sensitive use cases like high-frequency trading or real-time routing. It trades speed for correctness, traceability, and resilience.

That trade-off is exactly right for fintech and insurtech.

Deep dive into a motor claims application of this system prompt

How I did this: I asked ChatGPT 5.2 how this System prompt could be used for a motor claims example and used Nano Banana Pro to make a series of images.

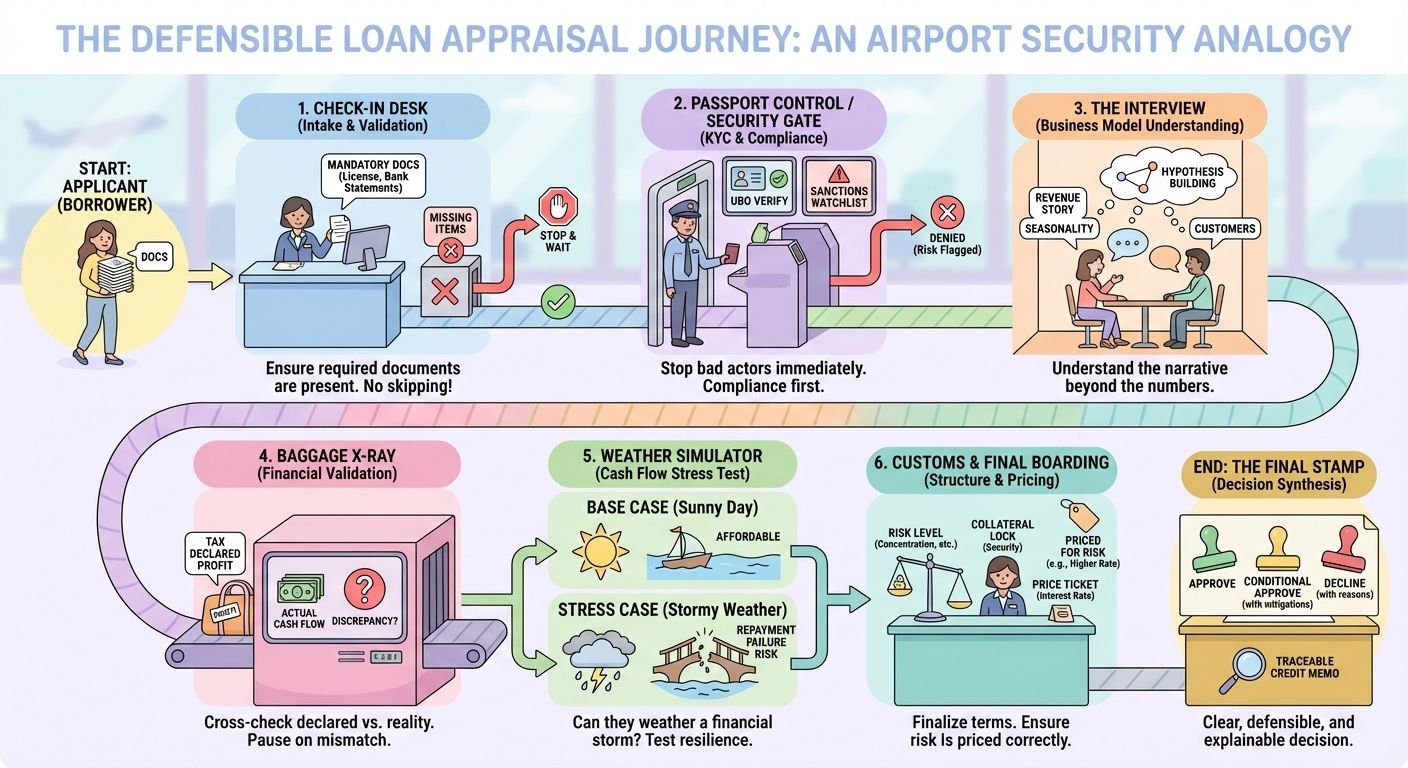

Deep dive into a business loan application of this system prompt

Step 1: Application Intake and Scope Definition

The process starts when a business loan application enters the system. The AI agent does not evaluate risk yet. It first defines the scope of the decision. It identifies:

Loan amount.

Loan purpose.

Tenor requested.

Legal entity applying.

At this stage, the agent maps mandatory versus optional inputs. Mandatory items include the following (these may differ by jurisdiction and from bank to bank):

Trade license.

Ownership and UBO details.

Bank statements.

Financials or management accounts.

Optional items are tagged but not required to proceed. No analysis is performed until this dependency map is complete.
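A dependency map like this can be very simple. In this sketch the mandatory list follows the items above, while the optional items and all identifiers are hypothetical examples of mine.

```python
MANDATORY = ["trade_license", "ubo_details", "bank_statements", "financials"]
OPTIONAL = ["business_plan", "customer_contracts"]  # hypothetical optional items


def intake_status(submitted: set[str]) -> dict:
    missing_mandatory = [doc for doc in MANDATORY if doc not in submitted]
    return {
        # Analysis is blocked only by mandatory gaps, never by optional ones.
        "ready_for_analysis": not missing_mandatory,
        "missing_mandatory": missing_mandatory,
        "missing_optional": [doc for doc in OPTIONAL if doc not in submitted],
    }


status = intake_status({"trade_license", "bank_statements"})
print(status)
```

Here the agent would ask for the UBO details and financials, and simply note that the optional items are absent.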

Step 2: Compliance and Eligibility Gate

Before credit analysis, the system enforces a compliance gate. The AI agent performs:

Entity validation.

UBO verification.

Sanctions and PEP screening.

Jurisdictional risk checks.

If a hard compliance failure is detected, the process stops or escalates. If information is incomplete but not disqualifying, the gap is recorded.

No cash-flow analysis occurs at this stage. This preserves regulatory sequencing.

Step 3: Business Understanding and Hypothesis Formation

Once compliance clears, the agent builds a structured understanding of the business.

It identifies:

Industry and operating model.

Revenue sources.

Cost drivers.

Customer concentration.

Seasonality patterns.

From this, the agent generates explicit hypotheses. Examples:

Revenue volatility is seasonal rather than structural.

Margins are pressured by supplier dependency.

Growth is driven by one key customer.

These hypotheses guide all subsequent analysis. Nothing is assumed. Everything is stated.

Step 4: Financial Data Validation

The agent validates financial inputs before interpretation.

It checks:

Consistency between P&L, balance sheet, and cash flow.

Alignment with bank statements.

VAT filings reconciliation.

Trend stability across periods.

If discrepancies appear, the agent pauses. It generates explanations:

Timing mismatches.

One-off income.

Aggressive revenue recognition.

The agent does not normalize numbers silently. Any adjustment is documented or escalated.
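As an illustration of one such check, here is a sketch that compares reported revenue with credits seen in bank statements. The 5% tolerance is an arbitrary assumption, and the function name and return fields are mine.

```python
def reconcile(reported_revenue: float, bank_credits: float, tolerance: float = 0.05) -> dict:
    if reported_revenue <= 0:
        raise ValueError("reported_revenue must be positive")
    gap_ratio = abs(reported_revenue - bank_credits) / reported_revenue
    if gap_ratio <= tolerance:
        return {"status": "consistent", "gap_ratio": gap_ratio, "to_investigate": []}
    # A discrepancy is never adjusted silently; candidate explanations are listed.
    return {
        "status": "discrepancy",
        "gap_ratio": gap_ratio,
        "to_investigate": [
            "Timing mismatches",
            "One-off income",
            "Aggressive revenue recognition",
        ],
    }


check = reconcile(reported_revenue=1_000_000, bank_credits=900_000)
print(check["status"], f"{check['gap_ratio']:.0%}")
```

A 10% gap exceeds the tolerance, so the agent pauses and carries the three explanations forward as hypotheses to test.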

Step 5: Cash Flow and Repayment Capacity Analysis

Only after validation does credit analysis begin. The agent evaluates:

Operating cash flow stability.

Debt service coverage.

Working capital cycles.

Sensitivity to revenue shocks.

It runs structured scenarios:

Base case.

Downside case.

Stress case.

If repayment capacity fails under reasonable stress, risk is flagged clearly. No compensating optimism is introduced.
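To show the arithmetic, here is a small worked sketch of debt service coverage (operating cash flow divided by debt service) across the three scenarios. All numbers, the shock sizes, and the 1.2 minimum are invented for illustration. For simplicity I apply the shock directly to cash flow, which a real model would not do.

```python
def dscr(operating_cash_flow: float, annual_debt_service: float) -> float:
    # Debt service coverage ratio: cash available per unit of debt service due.
    return operating_cash_flow / annual_debt_service


BASE_CASH_FLOW = 500_000   # illustrative
DEBT_SERVICE = 300_000     # illustrative
MIN_DSCR = 1.2             # assumed policy floor, not a regulatory figure
SHOCKS = {"base": 0.0, "downside": 0.20, "stress": 0.40}

results = {
    name: round(dscr(BASE_CASH_FLOW * (1 - shock), DEBT_SERVICE), 2)
    for name, shock in SHOCKS.items()
}
flags = {name: ratio < MIN_DSCR for name, ratio in results.items()}

print(results)  # {'base': 1.67, 'downside': 1.33, 'stress': 1.0}
print(flags)    # {'base': False, 'downside': False, 'stress': True}
```

The stress case falls below the floor, so it gets flagged as is. Nothing in the code "rescues" the number.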

Step 6: Risk Identification and Prioritization

The agent now consolidates risk. It identifies:

Business risk.

Financial risk.

Industry risk.

Concentration risk.

Management dependency.

Each risk is ranked by:

Likelihood.

Impact.

Low-probability risks are documented, not ignored. High-impact risks are surfaced prominently. This mirrors real credit committee thinking.
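A quick sketch of ranking by likelihood and impact. The risks, scores, and the 1-to-5 impact scale are my own illustrative choices.

```python
risks = [
    {"name": "Customer concentration", "likelihood": 0.40, "impact": 5},
    {"name": "Management dependency", "likelihood": 0.20, "impact": 3},
    {"name": "Regulatory licence loss", "likelihood": 0.05, "impact": 5},
]


def prioritize(risks: list[dict], high_impact_threshold: int = 5) -> list[dict]:
    ranked = sorted(risks, key=lambda r: r["likelihood"] * r["impact"], reverse=True)
    # Nothing is dropped; high-impact items are marked even when unlikely.
    return [
        {**r, "surface_prominently": r["impact"] >= high_impact_threshold}
        for r in ranked
    ]


ranked_risks = prioritize(risks)
for r in ranked_risks:
    print(r["name"], r["surface_prominently"])
```

The licence-loss risk ranks last on the combined score, yet it is still documented and still surfaced because of its impact.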

Step 7: Structure and Collateral Assessment

The agent evaluates how the loan should be structured. It reviews:

Collateral type and enforceability.

Loan tenor versus cash cycle.

Repayment structure.

Covenant options.

If risk is elevated, the agent adapts structure. Examples:

Shorter tenor.

Lower exposure.

Additional guarantees.

Tighter covenants.

The system does not default to approve or decline. It explores structural mitigants first.

Step 8: Pricing and Risk Alignment

Pricing is assessed only after structure is defined. The agent evaluates:

Risk grade.

Expected loss.

Cost of capital.

Operating cost.

Pricing is stress-tested against downside scenarios. If pricing does not compensate for risk, the agent recommends:

Repricing.

Restructuring.

Or rejection.

There is no cosmetic approval.
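Here is a deliberately simplified sketch of the pricing check. It treats expected loss as probability of default times loss given default, and adds cost of capital and operating cost to get a minimum rate. The additive formula and every number are assumptions for illustration; real pricing models are more involved.

```python
def minimum_rate(pd_: float, lgd: float, cost_of_capital: float, operating_cost: float) -> float:
    expected_loss = pd_ * lgd  # expected loss as a fraction of exposure
    return expected_loss + cost_of_capital + operating_cost


def pricing_action(offered_rate: float, floor: float) -> str:
    return "proceed" if offered_rate >= floor else "reprice, restructure, or reject"


# Base case: 4% PD, 50% LGD, 6% cost of capital, 2% operating cost.
base_floor = minimum_rate(0.04, 0.50, 0.06, 0.02)
# Downside case: PD assumed to double.
stressed_floor = minimum_rate(0.08, 0.50, 0.06, 0.02)

offered = 0.11
print(f"base floor {base_floor:.2%}: {pricing_action(offered, base_floor)}")
print(f"stressed floor {stressed_floor:.2%}: {pricing_action(offered, stressed_floor)}")
```

An 11% rate clears the base case but not the downside case, which is exactly the situation where the agent should recommend repricing or restructuring.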

Step 9: Decision Synthesis

The agent produces a structured credit recommendation. It clearly separates:

Verified facts.

Assumptions.

Identified risks.

Mitigants.

Residual risk.

The recommendation is one of:

Approve.

Approve with conditions.

Decline with rationale.

Every conclusion is traceable to prior steps.
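One way to enforce that separation is to make the recommendation a structured record. This is only a sketch; the class name, field names, and validation rules are my own assumptions.

```python
from dataclasses import dataclass, field

DECISIONS = ("approve", "approve_with_conditions", "decline")


@dataclass
class CreditRecommendation:
    decision: str
    rationale: str
    verified_facts: list = field(default_factory=list)
    assumptions: list = field(default_factory=list)
    identified_risks: list = field(default_factory=list)
    mitigants: list = field(default_factory=list)
    residual_risk: str = ""

    def __post_init__(self):
        if self.decision not in DECISIONS:
            raise ValueError(f"decision must be one of {DECISIONS}")
        if not self.verified_facts:
            # A conclusion with no verified facts behind it is not traceable.
            raise ValueError("at least one verified fact is required")


rec = CreditRecommendation(
    decision="approve_with_conditions",
    rationale="Repayment capacity holds in base and downside cases only.",
    verified_facts=["Bank statements reconcile with management accounts"],
    assumptions=["Revenue dip in Q3 is seasonal"],
    identified_risks=["Customer concentration"],
    mitigants=["Shorter tenor"],
    residual_risk="Moderate",
)
print(rec.decision)
```

Because facts and assumptions live in different fields, a reviewer can see at a glance what was checked and what was merely believed.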

Step 10: Exception Handling and Escalation

If errors occur:

Data failures trigger intelligent retries.

Persistent gaps trigger clarification requests.

Structural issues trigger escalation.

The agent does not loop. It does not fabricate certainty.

Why This Flow Works

This process mirrors how experienced credit officers think. It aligns with regulatory expectations. It scales judgment without diluting discipline.

The system prompt is the invisible control layer ensuring:

Correct sequencing.

Explicit reasoning.

Defensible outcomes.

Why did I say the above two examples are for fintechs and insurtechs? Because I think startups will adopt these techniques faster than incumbents!

I hope you find other interesting uses for this system prompt for your AI-powered applications. Let me know when you do and we can have a good discussion!