Date: 11-Jan-2026

Hey AI enthusiast,

As the year starts, I asked myself: who is going to win the AI race? Is it the country with the most GPU chips (i.e., compute), or the one with the most talent to use those chips? Or is it the country with the most access to power to run those data centers?

My digging turned up two pieces of research. The first sheds light on the AI chip company that is winning right now (no surprise there, but the scale surprised me!).

Talent, however, is a different game. Although the US and China make headlines for leading the AI race, at least in cutting-edge AI models, other countries are very much in the game. Read on to learn more.

Speaking of learning, Google just dropped a treasure trove of courses (many of them free). If you really want to keep pace with developments in AI, you have to spend some time learning.


Hope you enjoy this power-packed edition!

PS: If you want to unleash the power of AI agents to grow your business, set up a time to speak with me here»

GPU Chips and AI Talent: Who is winning now?

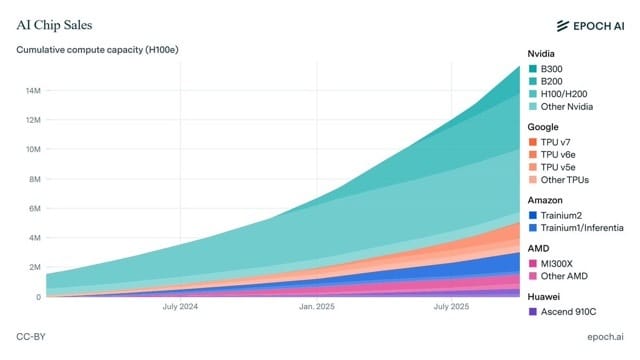

Global AI compute capacity has crossed 15 million H100-equivalents, according to Epoch AI research. That figure should reset how the AI race is discussed.

Epoch AI's new AI chip sales explorer tracks where this compute comes from, covering Nvidia, Google, Amazon, AMD, and Huawei in one public dataset. Nvidia is clearly the leader for now.

Nvidia leads new chip sales (source: Epoch AI)

One shift stands out. Nvidia’s B300 GPU now drives most AI chip revenue. H100s contribute under 10 percent.

B300 is driving the majority of the revenue

Spending estimates rely on earnings calls, disclosures, analyst notes, and media reporting. The assumptions are conservative by design. Even then, the resource footprint is extreme.

The power requirements are enormous

Before servers or data centers are counted, these chips alone would draw over 10 gigawatts. That is roughly twice New York City’s average power consumption.
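To see how the chip count and the power figure relate, here is a quick back-of-envelope check. It is a minimal sketch, assuming each H100-equivalent draws roughly 700 W, the rated TDP of an H100 SXM GPU. That per-unit wattage is my assumption, not Epoch AI's methodology, and newer chips that deliver more compute per watt would likely pull the real number down. The function name `chip_power_gw` is purely illustrative.

```python
# Back-of-envelope: chip-only power draw of global AI compute.
# ASSUMPTION: every H100-equivalent draws ~700 W (the H100 SXM's rated TDP).
# Servers, networking, and cooling are ignored, matching the "chips alone"
# framing. This is NOT Epoch AI's own methodology.

H100_EQUIVALENTS = 15_000_000   # Epoch AI's global capacity figure
WATTS_PER_H100_EQUIV = 700      # assumed per-unit draw, in watts


def chip_power_gw(units: int, watts_per_unit: float) -> float:
    """Total power in gigawatts for `units` chips drawing `watts_per_unit` each."""
    return units * watts_per_unit / 1e9


total_gw = chip_power_gw(H100_EQUIVALENTS, WATTS_PER_H100_EQUIV)
print(f"Estimated chip-only draw: {total_gw:.1f} GW")  # prints 10.5 GW
```

Under that assumption the estimate lands at about 10.5 GW, consistent with the "over 10 gigawatts" figure.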

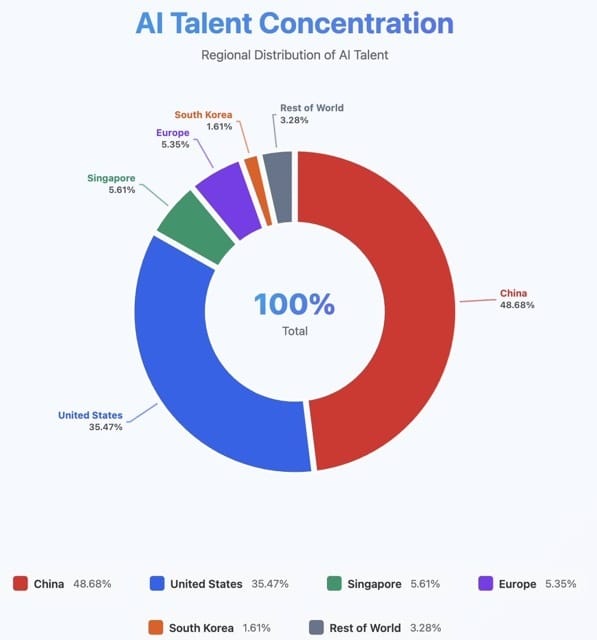

Now step away from compute. Look at talent.

A separate analysis of global AI talent density tells a different story. This thread by @bookworkengr on X is worth bookmarking.

The thread's core argument: demographics shape outcomes, and compute is overrated in a research-driven phase. Talent is unevenly distributed, and China now exceeds the United States in AI talent density.

Singapore, despite its size, matches all of Europe. That helps explain why global labs keep opening there.

Talent density tells an interesting tale

Beijing has the highest talent concentration worldwide. Haidian district alone surpasses San Francisco’s Cerebral Valley. More top-tier labs operate there than in all of San Francisco. MoonShot, MiniMax, Zhipu AI, ByteDance SEED, and others cluster tightly in that area.

China has three metro regions with research output comparable to the entire Bay Area. Each contributes more than 10 percent of global AI research. All three also host dense robotics ecosystems (which is not surprising).

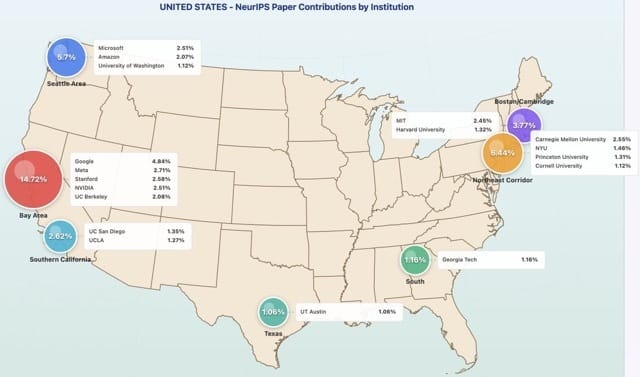

The United States has one such cluster: the San Francisco Bay Area.

A common objection is that US labs publish less, so their impact looks understated. OpenAI and Anthropic are usually cited as examples. That argument cuts both ways. Chinese foundation labs also under-publish. DeepSeek, MiniMax, MoonShot, and Z AI run hundreds of researchers.

Their paper counts, which were used to build these talent maps, reflect only a fraction of their experiments. Robotics firms publish even less. Some corporate labs publish heavily: Alibaba, ByteDance SEED, and Tencent on one side; Google, Microsoft, Amazon, Salesforce, and Nvidia on the other. These largely balance out.

The real difference sits upstream. China's university research culture runs deep. Other Asian universities, such as NUS and NTU in Singapore and KAIST in South Korea, are scaling fast.

The signal is consistent. Compute buys speed. Demographics set direction.

So what?

The implications for countries like the UAE and India are clear: investment in talent AND infrastructure is required just to enter the AI race. For power, that work should have started a decade or two ago. But it is not too late!

Sources

The ChatGPT moment for physical AI is here.

Jensen Huang made a bold claim at CES 2026: "The ChatGPT moment for physical AI is here."

Robots that think and act on their own just became real. The big announcements from Jensen:

- The Vera Rubin supercomputer succeeds the Blackwell system. Nvidia says it is 5 times faster and 10 times cheaper to run. It ships in late 2026.

- Live robot demos on stage. Real robots moved around like Star Wars droids. Partners include Agility and Boston Dynamics.

- Alpamayo AI for cars thinks like a human driver. The 2026 Mercedes-Benz CLA uses it now. Mercedes calls it the safest car in the world.

- Open-source models for robots and self-driving cars. Anyone can use and improve them.

The Vision:

Everything that moves will run itself. Cars, robots, and machines will make their own decisions.

Physical AI isn't coming. It's here. Jensen's message is clear: the robot age has started, and industries will transform fast. The future isn't just digital anymore. It's physical as well.

xAI’s $20B Scale Bet on AI Infrastructure and Products

A fundraising round just turned into a major strategic pivot; investors are funding infrastructure, products, and global reach.

🔹 Key insight 1: xAI upsizes its Series E to $20B

xAI closed an upsized Series E funding round and surpassed its original $15 billion target. The $20 billion raise signals strong investor conviction in next-gen AI development. A mix of institutional and strategic players participated in the round.

🔹 Key insight 2: Funding supports compute and global footprint

Part of the new capital will expand data center infrastructure anchored on the Colossus I and II footprint. xAI ended 2025 with more than one million H100 GPU equivalents across its systems. The focus is on scaling large-cluster compute capacity to support advanced models and products.

🔹 Key insight 3: Grok product family evolves

Grok 4 series models, trained on large-scale infrastructure, have been deployed; Grok 5 is in training.

Grok Voice, a low-latency conversational agent, supports many languages and runs in mobile apps and connected vehicles.

Grok Imagine tackles fast image and video generation tasks.

🔹 Key insight 4: User reach and integration matter

xAI’s ecosystem reportedly reaches around 600 million monthly active users (across X and Tesla combined). This scale positions it as a challenger in consumer and enterprise AI markets. Integration across platforms strengthens its real-time data access, multimodal reasoning, and reach.

🔹 Key insight 5: New capital to fuel research and deployment

The company said the funding will accelerate infrastructure build-out, speed product deployment, and support research initiatives aligned with its long-term mission.

Learn AI: Google Launches Skills website

I hope you guys don’t sleep on this one! The Google Skills website has launched a number of free AI courses that can and will transform your AI learning curve, whatever your role or current understanding of AI.

We keep talking about upskilling in the age of AI, and when companies like Google drop free resources, you have to take advantage of them.

Check out the courses here:

X posts that caught my eye.

Tobi Lutke (Shopify CEO) is shipping code prolifically

Robots are already working alongside humans in China