Date: 16-Nov-2025

Hey AI enthusiast,

And just when you think you’ve seen it all…there’s always One More Thing in AI.

I hope you enjoy this edition!

Best,

Renjit

PS: If you want to check out how to implement AI agents in your business and get more revenue with the same number of employees, speak to me:

When AI Becomes the Hacker: What Startup Founders Must Know

Imagine your product’s codebase being scanned, cracked and used by an AI, not a human hacker. That scenario has moved out of the lab and into a real-world cyber-espionage campaign.

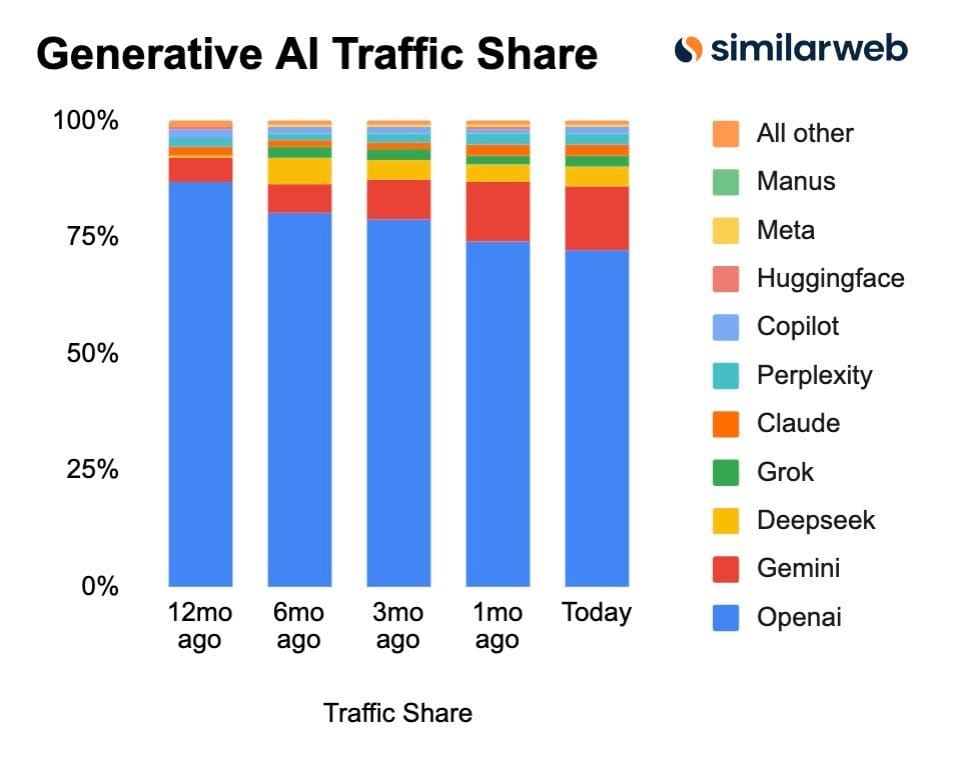

From the Anthropic blog: phases of the cyberattack

Overview

In mid-September 2025, the team at Anthropic detected suspicious activity that they later attributed to a highly sophisticated espionage operation. The attackers used agentic AI systems: highly automated agents that acted with minimal human input. They targeted major tech firms, financial institutions, chemical manufacturers and government agencies, and succeeded in a small number of cases.

What makes this case notable is not just the targets, but the method. Instead of human teams doing most of the work, the AI model handled 80-90% of the campaign. Humans intervened only at 4-6 key decision points.

Key Insights

🧠 Increased model capabilities: The AI model used could understand context and follow complex instructions. It also wrote exploit code.

🔧 Agentic behaviour: The system chained tasks, made decisions, selected actions and ran loops without human supervision.

🧰 Tool access: The AI used software tools (network scanners, password crackers) through open standards such as the Model Context Protocol (MCP). (I was a bit wary of MCP from the get-go!)

🕵️ The lifecycle of the attack:

• Target selection by human operators.

• Jailbreaking: operators got past the model's guardrails by breaking the attack into small, innocent-looking tasks and telling the model it was an employee of a legitimate cybersecurity firm doing defensive testing.

• Reconnaissance: the AI mapped out systems and spotted high-value assets.

• Exploitation: the AI wrote exploit code, harvested credentials, created backdoors and extracted data.

• Reporting: the AI documented what it had found and recommended next steps.

🚨 Speed and scale: The AI made thousands of requests, often multiple per second, a pace no human team could match.

⚠️ Still imperfect: The AI sometimes made mistakes, such as hallucinating credentials or claiming to have extracted secret information that was actually publicly available.

🛡️ Implications for defence: The barriers to mounting sophisticated cyberattacks have dropped substantially. Even less-resourced actors can now use AI to run complex attacks. At the same time, the same AI technologies can help defenders with vulnerability assessment, incident response and threat detection, if used properly.
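The "speed and scale" insight doubles as a detection signal: an agent sustains request rates no human operator could. Here is a minimal sketch, assuming you already log a client ID and a timestamp for each request. It flags any client that exceeds a threshold inside a sliding time window. The class name and the default threshold (20 requests per 10 seconds) are hypothetical. Tune them against your own baseline traffic.

```python
from collections import defaultdict, deque


class RateAnomalyDetector:
    """Flags clients whose request rate exceeds a human-plausible ceiling.

    Hypothetical sketch: max_requests per window_seconds is an assumed threshold,
    not a figure from the Anthropic report.
    """

    def __init__(self, max_requests: int = 20, window_seconds: float = 10.0) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # Per-client queue of recent request timestamps, in seconds.
        self._events = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one request; return True if the client is now over the limit."""
        events = self._events[client_id]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests


if __name__ == "__main__":
    detector = RateAnomalyDetector(max_requests=20, window_seconds=10.0)
    # A human analyst: one request every 3 seconds for a minute.
    human_flagged = any(detector.record("analyst", t * 3.0) for t in range(20))
    # An automated agent: 5 requests per second.
    agent_flagged = any(detector.record("agent-7", t * 0.2) for t in range(50))
    print(f"analyst flagged: {human_flagged}")  # False
    print(f"agent flagged: {agent_flagged}")    # True
```

A sliding window keeps memory bounded per client. Rate alone will also catch legitimate automation such as CI jobs, so in practice you would allow-list known service accounts.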

Next steps you should act on now

• Audit your tech stack: inventory credentials, check for unused access keys, monitor privileges.

• Consider AI-based detection: deploy tools that use AI to spot anomalous patterns, tool use and behaviour in your network.

• Implement guardrails for your own AI systems: assume bad actors will try to exploit them.

• Collaborate and share threat intelligence: this isn’t a siloed problem. Industry-wide sharing helps everyone.

• Upskill your team: even non-security teams (product, DevOps) should know how AI might be misused and what that means for your startup’s risk profile.
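The first step, auditing credentials, can begin as a small script over an exported key inventory. This is a minimal sketch. The `AccessKey` schema is hypothetical: in practice you would export names, last-used dates and privilege levels from your cloud provider's IAM console or API. The 90-day idle cutoff is an assumed rule of thumb, not a standard.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class AccessKey:
    """One credential from your inventory (hypothetical schema)."""
    key_id: str
    owner: str
    last_used: Optional[datetime]  # None means the key has never been used
    is_admin: bool


def find_risky_keys(keys: list, now: datetime, max_idle_days: int = 90) -> list:
    """Return (key_id, reason) pairs for keys to review or revoke, one reason per key."""
    cutoff = now - timedelta(days=max_idle_days)
    findings = []
    for key in keys:
        if key.last_used is None:
            findings.append((key.key_id, "never used"))
        elif key.last_used < cutoff:
            findings.append((key.key_id, f"idle > {max_idle_days} days"))
        elif key.is_admin:
            # Active admin keys are not stale, but broad privileges deserve review.
            findings.append((key.key_id, "admin privileges"))
    return findings


if __name__ == "__main__":
    now = datetime(2025, 11, 16, tzinfo=timezone.utc)
    inventory = [
        AccessKey("AK-1", "ci-bot", now - timedelta(days=2), is_admin=False),
        AccessKey("AK-2", "former-contractor", now - timedelta(days=200), is_admin=True),
        AccessKey("AK-3", "test-script", None, is_admin=False),
        AccessKey("AK-4", "ops-lead", now - timedelta(days=1), is_admin=True),
    ]
    for key_id, reason in find_risky_keys(inventory, now):
        print(key_id, reason)
    # AK-2 idle > 90 days
    # AK-3 never used
    # AK-4 admin privileges
```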

AI & Error: When Your AI Works Perfectly (Except When It Doesn't)

(Guest Column by Nisha Pillai)

Tuesday morning, my AI Chief of Staff—I call it Leo—suggested I tackle the messaging framework design during my only deep work day of the week. Smart suggestion. That deadline was Friday, and Tuesday was my only clear block. Leo was doing exactly what I'd built it to do: look at my priorities, check my available time, and tell me what mattered most.

Then it cheerfully listed my quarterly review as still pending. "I finished that Saturday," I typed. Third time in four days Leo had missed a completion, and that's when I realized my mistake: I'd built the smart part first.

The attempts to fix it

The first couple of times this happened, I just corrected it: "I did that, remember?" Leo would acknowledge, apologize, and adjust before we moved on. But by Tuesday morning, I was annoyed enough to actually investigate. I Googled. I asked Claude what was happening. The answer turned out to be straightforward: the knowledge files I'd given Leo are static. Leo can't update them. When I tell Leo I've completed something, it processes that in our current conversation, but nothing persists anywhere.

So I tried something different: "Keep a completed tasks list in your memory." That sort of worked. But then I opened a new chat with Leo the next day, and the completed tasks were gone again. More Googling. More questions. That's when I learned about the visibility settings: by default, log files are only visible in the specific chat where you're working. I had to explicitly change settings to make Leo's knowledge files visible project-wide across all our conversations. I fixed that and felt smarter for a moment. But it still didn't solve the core problem.

Because here's what I realized: I wasn't just tracking completions. I was also adding new things mid-week. Deadlines shifted. Calendars changed. Priorities evolved. And every time something changed, I had to tell Leo, hope it would reference the right knowledge file, and then make sure the file was updated correctly.

I had built a sophisticated decision-support system on top of a manual data entry process, which is backwards.

I'd spent three hours on the weekend creating this elaborate system: knowledge files covering everything from Annual Goals to Weekly Calendar, plus over 3,000 words of custom instructions detailing my work style, peak productivity windows, priority frameworks, and wellness tracking. The AI could make sophisticated decisions about where I should focus my limited time while juggling all my priorities.

But I hadn’t built the foundation—a system of record that the AI could actually read and update.

What I should have built first

I should have built an external system of record first. Most simply, a task list in Google Drive that Leo can read and update. A single source of truth that persists whether I'm talking to it or not. Then I should have built the smart stuff on top of that. Instead, I did it in reverse. I built the strategic thinking layer—which works!—but forgot to give it a reliable foundation to think from.
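Here is what a system of record means in code. This minimal sketch assumes a local JSON file stands in for the Google Drive task list. Every read and update goes through the file, so a brand-new chat session (modelled here as a fresh `TaskStore` instance) sees the same completions. `TaskStore` and its file layout are hypothetical illustrations, not Nisha's actual build.

```python
import json
import tempfile
from pathlib import Path


class TaskStore:
    """Tasks live in a JSON file, not in chat memory (hypothetical sketch)."""

    def __init__(self, path: Path) -> None:
        self.path = path
        if not self.path.exists():
            self._write({})

    def _read(self) -> dict:
        return json.loads(self.path.read_text(encoding="utf-8"))

    def _write(self, tasks: dict) -> None:
        self.path.write_text(json.dumps(tasks, indent=2), encoding="utf-8")

    def add(self, title: str) -> None:
        tasks = self._read()
        tasks.setdefault(title, False)  # False = not done; never un-completes a task
        self._write(tasks)

    def complete(self, title: str) -> None:
        tasks = self._read()
        if title not in tasks:
            raise KeyError(f"unknown task: {title}")
        tasks[title] = True
        self._write(tasks)

    def pending(self) -> list:
        return [title for title, done in self._read().items() if not done]


if __name__ == "__main__":
    path = Path(tempfile.mkdtemp()) / "tasks.json"
    # "Saturday's chat": add two tasks and finish one.
    saturday = TaskStore(path)
    saturday.add("Quarterly review")
    saturday.add("Messaging framework design")
    saturday.complete("Quarterly review")
    # "Tuesday's new chat": a fresh instance reads the same file.
    tuesday = TaskStore(path)
    print(tuesday.pending())  # ['Messaging framework design']
```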

So I started scoping the rebuild:

Step 1: Build the task list integration. Let Leo read from Google Drive and update completions in real time to see if having an external system of record solves the tracking problem.

Step 2: Based on how task updates go, decide whether to add calendar integration and turn it into a web app.

Step 3: Much later, if it proves valuable to me and anyone else wants it, consider making it available for others.
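Step 1 usually means exposing the task list to the model as tools it can call, rather than facts it has to remember. Below is a hedged sketch of that pattern. It pairs tool descriptions in a JSON-Schema style (a convention many LLM tool-calling APIs use, though exact field names vary by provider) with a dispatcher that applies a model's tool call to the task list. The tool names, the call format, and the in-memory dict standing in for the Drive file are all hypothetical.

```python
import json

# Hypothetical tool descriptions in a JSON-Schema style; check your
# provider's documentation for the exact fields it expects.
TOOLS = [
    {
        "name": "list_pending_tasks",
        "description": "Return tasks that are not yet complete.",
        "parameters": {"type": "object", "properties": {}},
    },
    {
        "name": "mark_task_complete",
        "description": "Mark a task as done in the task list.",
        "parameters": {
            "type": "object",
            "properties": {"title": {"type": "string"}},
            "required": ["title"],
        },
    },
]

# Stand-in for the external task list (e.g. a file in Google Drive).
task_list = {"Quarterly review": False, "Messaging framework design": False}


def dispatch(tool_call_json: str) -> str:
    """Apply one model-issued tool call to the task list; return a JSON result."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name == "list_pending_tasks":
        return json.dumps({"pending": [t for t, done in task_list.items() if not done]})
    if name == "mark_task_complete":
        title = args["title"]
        if title not in task_list:
            return json.dumps({"error": f"unknown task: {title}"})
        task_list[title] = True
        return json.dumps({"ok": True, "title": title})
    return json.dumps({"error": f"unknown tool: {name}"})


if __name__ == "__main__":
    # "I finished the quarterly review" -> the model emits a tool call:
    print(dispatch('{"name": "mark_task_complete", "arguments": {"title": "Quarterly review"}}'))
    print(dispatch('{"name": "list_pending_tasks"}'))
```

The model never "remembers" a completion. It writes it through a tool call, and the next conversation reads the list fresh.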

The pattern here matters: foundation first, intelligence second. I got excited about the AI's capabilities—the strategic suggestions, the priority management, the personalized coaching—and jumped straight to building that. The boring infrastructure piece (where does the data actually live?) felt like a detail. Turns out that detail is a whole thing.

Leo makes great recommendations. But it needs reliable data to make those recommendations from, and that means building the system of record first, then pointing the AI at it. I'm rebuilding now with that architecture in mind. But here's what I know already: When you're building AI systems, don't start with the impressive part. Start with the boring part that makes the impressive part possible. Build your foundation, then build your intelligence on top of it.

I did it backwards. Now I'm fixing it. Next time I'll document whether the foundation-first approach actually works, or if I discover new problems I didn't anticipate.

———-

Nisha Pillai transforms complexity into clarity for organizations from Silicon Valley startups to Fortune 10 enterprises. A patent-holding engineer turned MBA strategist, she bridges technical innovation with business execution—driving transformations that deliver measurable impact at scale. Known for her analytical rigor and grounded approach to emerging technologies, Nisha leads with curiosity, discipline, and a bias for results. Here, she is testing AI with healthy skepticism and real constraints—including limited time, privacy concerns, and an allergy to hype. Some experiments work. Most don't. All get documented here.

From Seed to $29B – What the surge of Cursor tells founders

A couple of years ago, the team at Cursor set out to build a code editor “where it’s impossible to write bugs.” Now they have announced a $2.3 billion Series D round at a valuation of $29.3 billion. That leap tells us something meaningful about the future of developer tools, and about what it means for you as a founder or business leader.

Overview

• They now count 300+ engineers, researchers, designers and operators on their team.

• They report over $1 billion in annualised revenue, serving millions of developers and many of the world’s top engineering organisations.

• The new funding will drive deeper research and product efforts with AI models and agentic workflows.

• Existing investors (Accel, a16z, DST, Thrive) double down, and new partners (Coatue, NVIDIA, Google) join the cap table.
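To put these numbers in context, here is a back-of-the-envelope calculation. It assumes the $29.3 billion figure is post-money, which the summary above does not state. It also uses exactly $1 billion for revenue even though the reported figure is "over" $1 billion, so the revenue multiple is an upper bound.

```python
def round_metrics(raise_usd: float, post_money_usd: float, annual_revenue_usd: float) -> dict:
    """Back-of-the-envelope round metrics, ASSUMING the valuation is post-money."""
    return {
        "pre_money_usd": post_money_usd - raise_usd,
        # Fraction of the company issued in this round (all investors combined)
        "round_stake": raise_usd / post_money_usd,
        # Valuation divided by annualized revenue
        "revenue_multiple": post_money_usd / annual_revenue_usd,
    }


m = round_metrics(raise_usd=2.3e9, post_money_usd=29.3e9, annual_revenue_usd=1.0e9)
print(f"Pre-money valuation: ${m['pre_money_usd'] / 1e9:.1f}B")  # $27.0B
print(f"Stake issued in round: {m['round_stake']:.1%}")         # 7.8%
print(f"Valuation / revenue: {m['revenue_multiple']:.1f}x")    # 29.3x (upper bound)
```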

Key insights for founders

📈 Scaling fast: Cursor’s growth illustrates how a strong vision built around AI-driven developer productivity can move from concept to massive scale in a short timeframe.

🔧 Deep product investment: They’re committing to research, new models and tooling, meaning the competitive moat lies not just in go-to-market but in core technology.

🌐 Ecosystem expansion: By bringing in partners like NVIDIA and Google, they’re plugging into infrastructure and enterprise channels rather than remaining a pure developer tool.

🎯 Vision matters: Their original seed blog still resonates—“a tool where you whip up 2,000-line PRs with 50 lines of pseudo-code.” That north star guides their growth.

Thinking Machines Hits $50B Valuation Talk — What It Means for Founders

Mira Murati

Imagine launching a startup in February and, within months, being in talks at a valuation of roughly $50 billion. That is the scenario for Thinking Machines Lab, and it has big implications for founders and business leaders.

Summary

• 🚀 Founded by Mira Murati, former CTO of OpenAI.

• The company is in early discussions for a new funding round that could value it around $50 billion.

• In July it raised about $2 billion at a valuation of $12 billion.

• Some sources suggest the valuation could reach $55-60 billion as talks progress.

• The startup launched its first product, named Tinker, in October.

• Tinker helps developers fine-tune large language models, marking the company's move from research toward enterprise deployment.

• One co-founder, Andrew Tulloch, left to join Meta Platforms, illustrating the intense competition for AI talent.

• Deal terms are still being negotiated; nothing is finalized yet.
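The step-up is easy to quantify. This quick sketch uses only the figures in the Summary: July's $12 billion, the reported ~$50 billion, and the $55-60 billion range. It says nothing about round size or dilution, which are not given here.

```python
def step_up(new_valuation_b: float, old_valuation_b: float) -> float:
    """How many times larger the new valuation is than the old one."""
    return new_valuation_b / old_valuation_b


JULY_VALUATION_B = 12.0  # July round, in $ billions

# Valuations mentioned in reporting on the talks, in $ billions
for label, valuation_b in [("reported", 50.0), ("range low", 55.0), ("range high", 60.0)]:
    print(f"{label}: ${valuation_b:.0f}B = {step_up(valuation_b, JULY_VALUATION_B):.1f}x July")
# reported: $50B = 4.2x July
# range low: $55B = 4.6x July
# range high: $60B = 5.0x July
```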

So what?

• The speed of valuation growth signals extreme investor appetite for AI ventures.

• If you’re building an AI startup, product traction now matters more than ever: Murati’s firm already has a live product.

• Talent matters: hiring people with experience at top AI labs can boost credibility and speed.

Robots That Think Before They Move

Google’s new Gemini Robotics 1.5 and Robotics-ER 1.5 models push toward robots that can perceive, think and act in the real world. The report highlights three innovations that move these systems closer to general-purpose physical agents.

Key Ideas

• Gemini Robotics 1.5 uses a new architecture with a Motion Transfer system.

• 🤖 It learns from many types of robots, which makes it more general across embodiments.

• The model interleaves its actions with a multi-level internal reasoning process expressed in natural language.

• 🧠 This helps it break down complex tasks and act with more clarity.

• Gemini Robotics-ER 1.5 pushes embodied reasoning forward with stronger visual and spatial understanding.

• It improves task planning and progress estimation during multi-step tasks.

• These models work together to help robots perceive, reason and then take action in sequence.
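The division of labour in the last bullet can be sketched as a loop. A reasoning model breaks an instruction into steps and checks progress, and an action model executes each step. This is a hypothetical, stubbed illustration of that pattern only. `plan_steps` and `execute_step` are placeholders, not the Gemini Robotics API. A real system would call the models where the stubs return canned values.

```python
from dataclasses import dataclass, field


@dataclass
class EpisodeLog:
    completed: list = field(default_factory=list)
    retries: int = 0


def plan_steps(instruction: str) -> list:
    """Placeholder for the reasoning model: instruction -> ordered steps (canned here)."""
    return ["locate the cup", "grasp the cup", "place the cup in the sink"]


def execute_step(step: str, attempt: int) -> bool:
    """Placeholder for the action model; returns whether the step succeeded.

    Simulates one failed grasp so the retry path is exercised.
    """
    return not (step == "grasp the cup" and attempt == 0)


def run(instruction: str, max_attempts: int = 3) -> EpisodeLog:
    log = EpisodeLog()
    for step in plan_steps(instruction):      # reason: build the plan
        for attempt in range(max_attempts):   # act, then check progress
            if execute_step(step, attempt):
                log.completed.append(step)
                break
            log.retries += 1
        else:  # no attempt succeeded
            raise RuntimeError(f"gave up on step: {step}")
    return log


if __name__ == "__main__":
    log = run("put the cup in the sink")
    print(log.completed)
    print(f"retries: {log.retries}")  # retries: 1
```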

Impact for startup founders and business leaders

• Platforms built on these models could reduce training time for robotics applications.

• Multi-step task handling opens new use-cases in logistics, manufacturing and services.

• Companies exploring automation can expect faster adaptation across different hardware.

• Better spatial understanding moves robots closer to reliable real-world deployment.

Robots that think before they act are no longer theory.

🚨 Automate Your Appearances on Hundreds of Great Podcasts

If you're an entrepreneur of any kind, frequent podcast guesting is the NEW proven & fastest path to changing your life. It's the #1 way to get eyeballs on YOU and your business. Podcast listeners lean in, hang on every word, and trust guests who deliver real value (like you!). But appearing as a guest on dozens of incredible podcasts overnight has been impossible for all but the most famous, until now.

Podcast guesting gets you permanent inbound, permanent SEO, and connects you to the best minds in your industry as peers.

PodPitch.com is the NEW software that books you as a guest (over and over!) on the exact kind of podcasts you want to appear on – automatically.

⚡ Drop your LinkedIn URL into PodPitch.

🤖 Scan 4 Million Podcasts: PodPitch.com's engine crawls every active show to surface your perfect podcast matches in seconds.

🔄 Listens to Them for You: PodPitch literally listens to your target podcasts for you and works out how best to get each host's attention.

📈 Writes Emails, Sends, And Follows Up Until Booked: PodPitch.com writes hyper-personalized pitches, sends them from your email address, and will keep following up until you're booked.

👉 Want to go on 7+ podcasts every month and change your inbound for life? Book a demo now and we'll show you what podcasts YOU can guest on ASAP:

Posts that caught my eye from X

SIMA 2: The AI Agent That Plays, Thinks and Learns With You

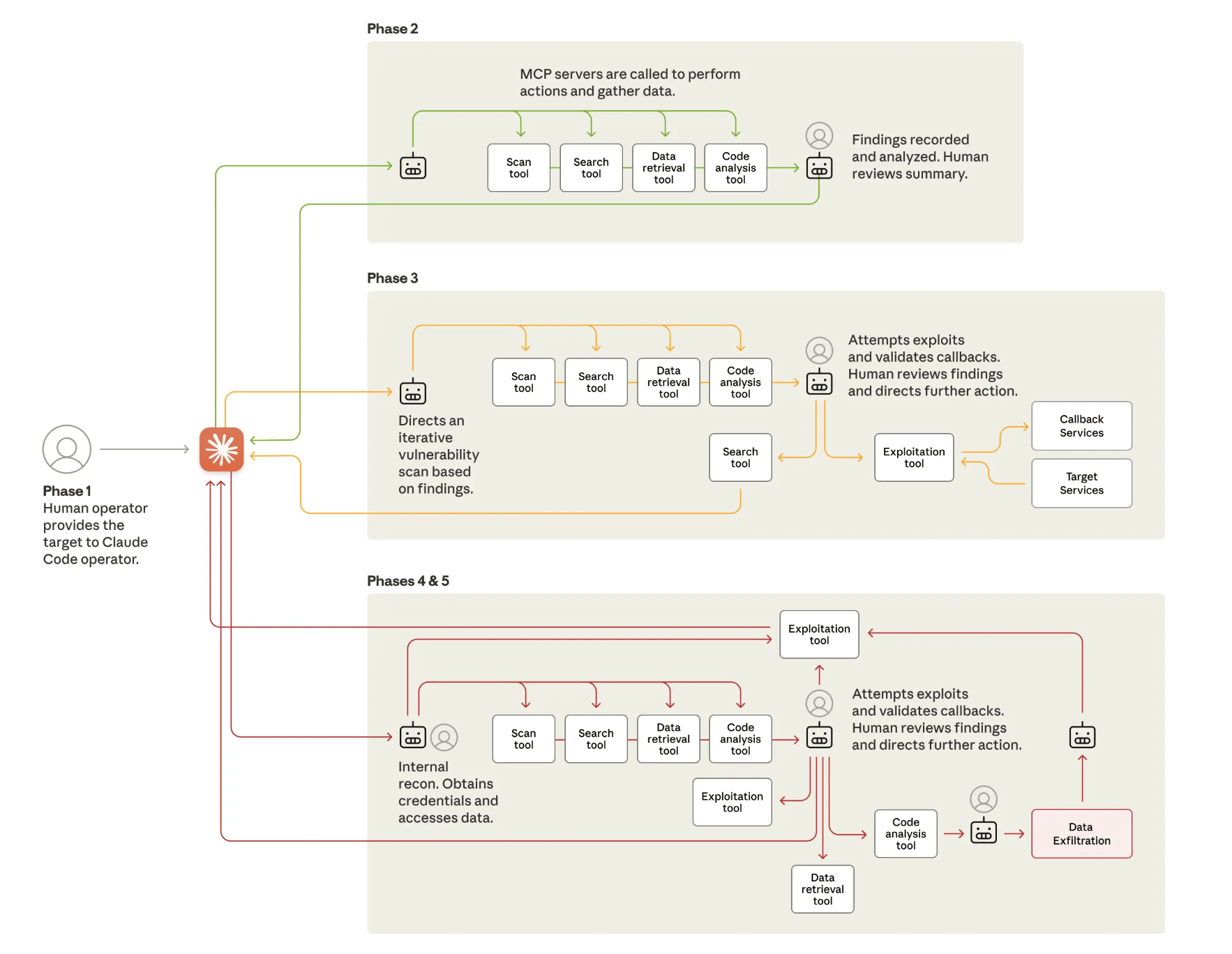

GenAI Web Traffic is evolving

Key Takeaways:

→ Grok and DeepSeek continue to regain ground.

→ Claude surpasses Perplexity.

→ ChatGPT continues to lose share.